Before we get started with this week’s post…

Do you have a question related to leadership, management or coaching you’d like to see dissected and answered here in the Hagakure? If so, please email it to theweeklyhagakure (at) gmail (dot) com! 🙏

We are yelling at the weather.

Don’t take it from me. Take it from Aaron Dignan, founder of The Ready and Murmur:

Yelling at the weather sums up the reality of senior leadership in many companies, an illustration of what happens when we try to “fix” a complex system. And then nobody is happy.

So, what to do?

The default approach is to yell ever louder at the weather. Sometimes it even seems to work, the same way the sun comes out every once in a while in winter, yelling or no yelling.

Another approach is to say that, if we’re being honest, the outcomes are not great. I know of companies with phenomenal employer branding where burnout is rampant at all levels. I know of places where, despite stellar quarterly earnings calls, both the senior leadership and the “rank & file” are beyond frustrated.

It is perhaps time to acknowledge that, despite some business results, if people are getting sick left and right, if so many are unhappy, yada yada yada, then maybe, just maybe, we’re not living up to our full business potential. Let alone the human one. Perhaps we are actually very, very far from that and just can’t see the forest for the trees.

Perhaps, then, it’s time to ask ourselves how we need to think and act differently rather than carry on yelling at the weather.

Mindset Shift

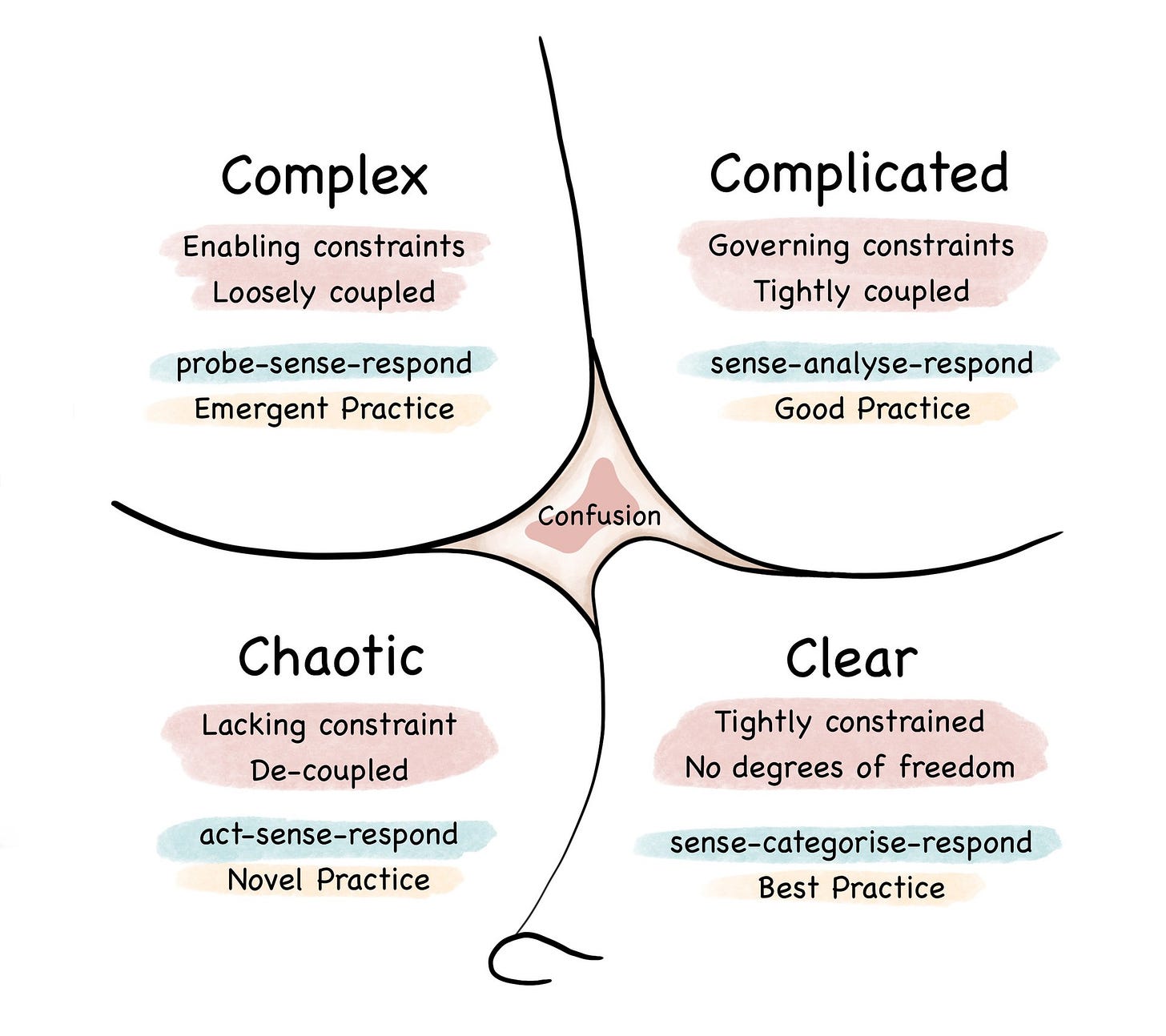

Dave Snowden and some of his colleagues at IBM created the Cynefin Framework back in 1999, a tool that has been described as a “sense-making device.” And isn’t it true that much of the time we’re simply trying to “make sense” of things?

What insights can we gather from these data?

What will best solve this customer problem?

What system architecture should we design given our requirements?

How should we organize our teams?

How can we improve delivery?

How can we optimize our main database?

None of these are simple, clear problems. Some are complicated while others are complex. Can you try and guess which ones are which?

The Cynefin Framework helps us understand how to approach them.

The bottom line is that for complicated problems we know the questions but not the answers. We are, then, in a position to sense-analyse-respond: we seek expertise that can analyse the situation thoroughly and come up with a solid plan, based on accepted good practices, to be executed rigorously. Because complicated problems are predictable (cause and effect are generally known a priori), this approach works very well.

But that recipe simply doesn’t work for complex problems, where cause and effect can only be understood in retrospect. So, despite having an idea of the outcomes we want (e.g. moving metric X), we don’t even know which questions to ask! In this scenario, no amount of analysis or “perfect” requirements will suffice. We will always be surprised, and requirements will always change. According to Cynefin, we must probe-sense-respond.

In other words, we must experiment our way through complexity and, in the best case, gradually move parts of the problem into the complicated domain.

Here’s the rub, though: experimentation embeds in itself the possibility of failure. And our quirky brains just hate that idea.

Systems of Accounting

Why do we intrinsically hate failure so much?

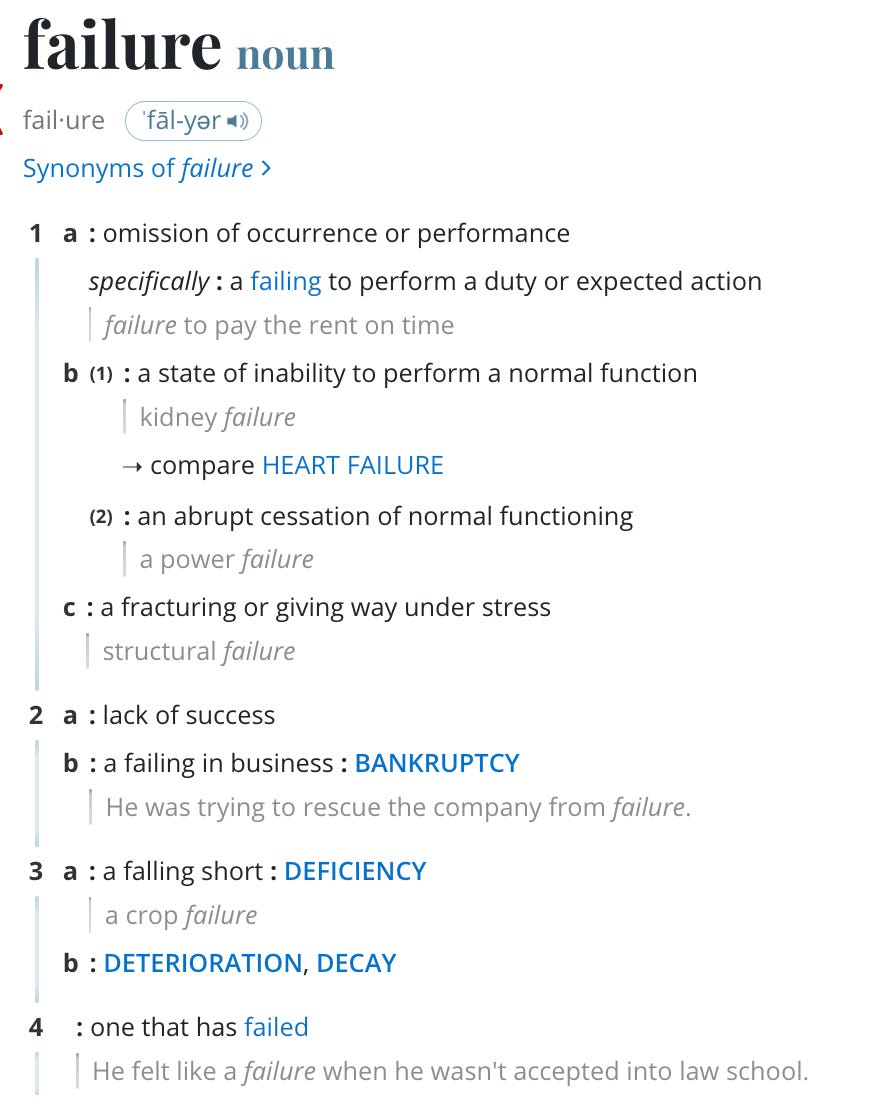

Part of the reason is how we frame it. The Merriam-Webster dictionary provides a bunch of definitions for “failure”, all of them tragic:

No wonder we don’t like failure that much. Kidney failure, structural failures, failed companies or feeling like a failure are all unpleasant to think about.

The Wikipedia page for failure also goes in the same direction, starting with:

Failure is the state or condition of not meeting a desirable or intended objective, and may be viewed as the opposite of success.

But wait, there’s hope. Right after, it states:

The criteria for failure depends on context, and may be relative to a particular observer or belief system.

Ah. Now we’re getting somewhere. It depends on context, observer, belief system. Hold that thought. Let’s take a quick detour to observe what the prevailing belief system tends to be.

Russell L. Ackoff was a phenomenal organizational theorist and systems thinker. In an article published in The Systems Thinker, he claims:

I have no interest in forecasting the future, only in creating it by acting appropriately in the present. I am a founding member of the Presentology Society.

While we all have a sense that we must act appropriately in the present, we nevertheless struggle to understand what the best course of action is. Increasingly, we’ve turned to data for the answers. Being “data-driven” became a badge of honor, with its allure of removing all fuzziness from the equation. The gut is said to be unreliable, emotions soft. Business needs predictability and a hard edge. It needs facts, not feelings.

Ackoff died in 2009, but he had long been keenly aware of the error of our ways. In the same article, he writes:

Identifying and defining the hierarchy of mental content, which, in order of increasing value, are: data, information, knowledge, understanding, and wisdom. However, the educational system and most managers allocate time to the acquisition of these things that is inversely proportional to their importance. Few individuals, and fewer organizations, know how to facilitate and accelerate learning—the acquisition of knowledge—let alone understanding and wisdom. It takes a support system to do so.

It is this facilitation of learning that is conspicuously missing from most organizations. Our obsession with execution, and our lax attitude towards the value of time and attention, have led to incredible amounts of work in progress that completely rob us of time to think, learn, and improve. As a consequence we don’t thrive, we merely survive. And most don’t even notice that’s the case.

Ackoff gets to the heart of the matter:

All learning ultimately derives from mistakes. When we do something right, we already know how to do it; the most we get out of it is confirmation of our rightness. Mistakes are of two types: commission (doing what should not have been done) and omission (not doing what should have been done). Errors of omission are generally much more serious than errors of commission, but errors of commission are the only ones picked up by most accounting systems. Since mistakes are a no-no in most corporations, and the only mistakes identified and measured are ones involving doing something that should not have been done, the best strategy for managers is to do as little as possible. No wonder managerial paralysis prevails in American organizations.

You see the conundrum? All learning ultimately derives from mistakes. But we’re afraid of making mistakes, and we demonize them. We seek the “tried and true” without realizing that there’s none to be found whenever we’re attempting something essentially new.

And when we can’t find it, we resort to inaction.

Reframing Failure

Failure is all that bad stuff AND also the price of admission for success. Failure is many things. Failure is nuanced.

While it’s easy to nod along and pay lip service to this, fostering a culture where failure is genuinely experienced this way is where the money is. Quite literally.

Professor Amy C. Edmondson, of “psychological safety” fame, has written extensively about fearless organizations—places where failure is not demonized but rather understood, and leveraged. In a 2011 Harvard Business Review essay, she lists an array of reasons for failure, ranging from blameworthy to praiseworthy:

Notice how the more complexity and uncertainty we have, the more we must accept that failure will happen. Hypothesis/exploratory testing inherently means failure is a possible—and natural—outcome. And that’s not only OK but praiseworthy, as long as we learn from it.

I know of no better way to influence culture than by making the implicit explicit: don’t assume people know what’s inside your head as a leader. Instead, if building a culture that exploits failure as a competitive advantage is a top goal, use every communication channel at your disposal (all-hands, 1:1s, staff meetings, retrospectives, weekly email updates) to hammer that message home, again and again.

What We Do Is Who We Are

However, saying that failure is the cost of discovery and learning won’t suffice. What you do and what you reward matter, a lot.

With this in mind, there are a few pressure points for progressively creating a culture that embraces failure:

Hiring. A high-performing team starts with hiring. What do you look for in candidates? A combination of curiosity, humility, and learning ability, with a generous splash of risk-taking, makes for a good fit in a learning, generative culture.

Onboarding. Remember: make the implicit explicit. Presuming you did a good job hiring people who are suited for experimentation and learning, inject this message into their onboarding process. It boils down to “failure when experimenting is only problematic if we don’t learn from it.”

Performance assessment. What does your career ladder (or whatever fancy name you use) say about this? Say what you will, but if you have one, chances are compensation, promotions, and status are tied to it. That’s a big incentive. Make sure it explicitly rewards embracing failure as a way to learn.

It’s important to note that everyone can do their part in building this culture. If you’re a first-line engineering manager leading a single team, you have an opportunity to create a cocoon of safety through the way you speak, what you highlight, and what you encourage.

For example, every team retrospective can lead to an experiment with how the team works, however small. Holding everyone, including yourself, accountable for running and learning from that experiment is completely within your power.

Rejecting Futurology

We must get out of the business of prediction and into the business of probing for what works and what doesn’t. The faster we learn, the better we get, and the more successful we are.

Probing in a complex environment happens best through safe-to-fail experiments. These are small-scale, contained experiments with the intent of approaching issues from different angles, allowing emergent possibilities to become more visible. The emphasis is not on ensuring success or avoiding failure, but on allowing ideas that are not useful to fail in small, contained, and tolerable ways.

Safe-to-fail experiments are also not just random stabs in the dark. They have:

A way of knowing it’s succeeding. What’s at least one example that would indicate that this experiment is working?

A way of knowing it’s failing. How will we know if it’s not working?

A way of dampening it. If it’s not working, how can we easily stop or attenuate it?

A way of amplifying it. If it is working, what’s the path to expanding it?

Coherence. Do we have a realistic reason for thinking this experiment can have a positive impact? Do we have enough human energy behind it?¹

A familiar example is running A/B tests for product discovery. A well-constructed A/B test has a hypothesis that specifies the conditions for success and failure. It’s easy to stop if it’s failing badly, and there’s usually a path to amplifying it (e.g. rolling it out to all users).
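To make the success, failure, dampening, and amplifying criteria concrete, here is a minimal Python sketch of an A/B test framed as a safe-to-fail experiment. Everything in it is hypothetical and for illustration only: the `ABTestExperiment` and `Decision` names, the thresholds, and the decision rule are assumptions, not a standard tool. It uses a deliberately naive lift threshold; a real A/B test would also check statistical significance.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AMPLIFY = "amplify"    # it's working: expand it (e.g. roll out to all users)
    DAMPEN = "dampen"      # it's failing: stop it or scale it back
    CONTINUE = "continue"  # not enough signal yet: keep probing, small and contained


@dataclass
class ABTestExperiment:
    """Hypothetical safe-to-fail A/B test with success/failure signals agreed up front."""
    hypothesis: str
    min_samples_per_arm: int  # below this, we don't trust the signal yet
    success_lift: float       # relative lift that means "it's working" (e.g. 0.05)
    failure_lift: float       # relative lift that means "it's failing" (e.g. -0.05)

    def decide(self, control_conv: int, control_n: int,
               variant_conv: int, variant_n: int) -> Decision:
        # Too little data in either arm: keep the experiment running as-is.
        if min(control_n, variant_n) < self.min_samples_per_arm:
            return Decision.CONTINUE
        control_rate = control_conv / control_n
        variant_rate = variant_conv / variant_n
        # Relative lift is undefined against a zero baseline; keep probing.
        if control_rate == 0:
            return Decision.CONTINUE
        lift = (variant_rate - control_rate) / control_rate
        if lift >= self.success_lift:
            return Decision.AMPLIFY
        if lift <= self.failure_lift:
            return Decision.DAMPEN
        return Decision.CONTINUE


if __name__ == "__main__":
    exp = ABTestExperiment(
        hypothesis="A shorter signup form increases signups",  # hypothetical
        min_samples_per_arm=1000,
        success_lift=0.05,
        failure_lift=-0.05,
    )
    print(exp.decide(100, 1000, 120, 1000))  # Decision.AMPLIFY
    print(exp.decide(100, 1000, 80, 1000))   # Decision.DAMPEN
```

The point of the design is that the thresholds are written down before the experiment starts, so "is it working?" becomes a question agreed in advance rather than one argued about afterwards.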

Where most leaders and managers get tripped up is in not applying the same thinking and methodology to their teams and to the organization as a whole. Change is hard, but we make it harder when we implicitly frame it as permanent. In a generative culture of learning, everything is an experiment. That doesn’t make it a hack; it’s a deliberate test to make us smarter than we currently are.

By the same token, one of the biggest challenges I observe among my clients is navigating the tension between engineering, product management, and business stakeholders, particularly around estimates and deadlines. While I won’t go down the rabbit hole of #NoEstimates and the like, we can at least observe that there is still widespread dysfunction between these functions when it comes to, again, futurology.

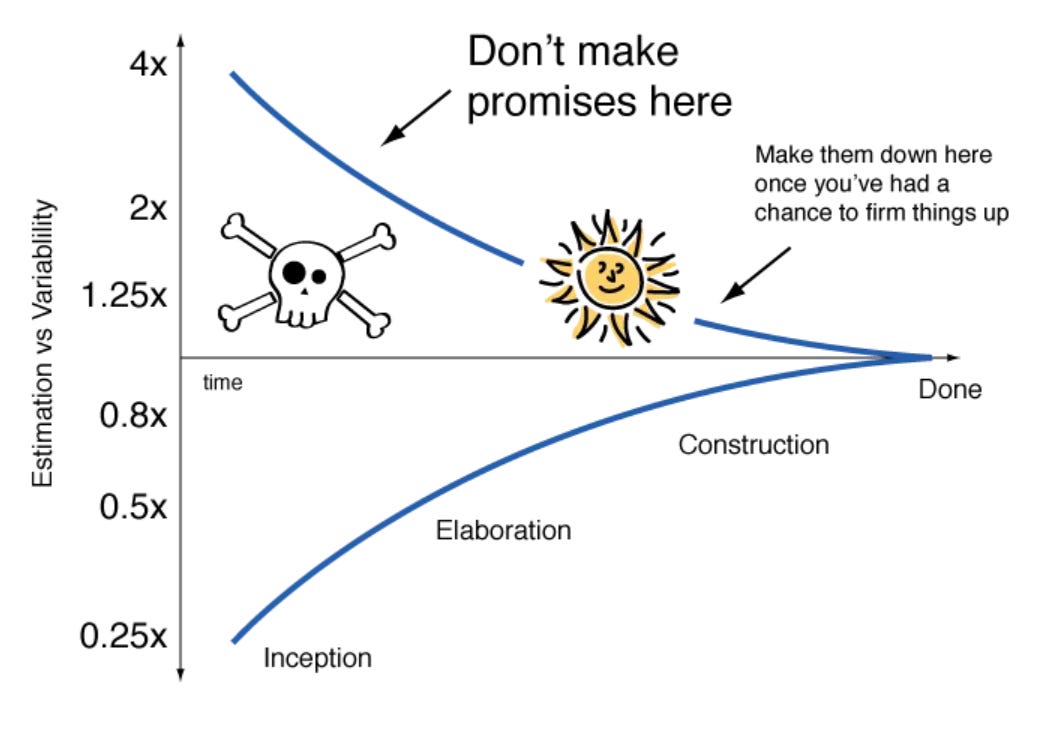

The famous “Cone of Uncertainty” comes in handy:

I predict that if you take a step back, bring your stakeholders together, and plop this picture on the table as a conversation starter, fruitful discussions will happen. Bridges can start being built.

But don’t stop there. The cone of uncertainty won’t narrow all by itself: it takes safe-to-fail experiments to learn more, predict less, and engender confidence among everyone involved.

A great example of a safe-to-fail experiment in this context is a “spike”, an approach that originated in Extreme Programming (XP). A spike is a simple way of exploring potential technical solutions. Importantly, the XP website points out:

Build the spike to only addresses the problem under examination and ignore all other concerns. Most spikes are not good enough to keep, so expect to throw it away. The goal is reducing the risk of a technical problem or increase the reliability of a user story's estimate.²

This is exactly how we narrow down the cone of uncertainty.

Reducing risk, delaying decisions until the last responsible moment, learning through experimentation, and creating shared understanding with stakeholders: this is how organizations and teams live up to their real potential and become “high-performance.”

Yelling at the weather? Not so much.

TL;DR

Understand that complexity requires a different mindset and approach in order to bring about positive change.

Think of ways to facilitate learning rather than merely executing. The former makes you better and better, the latter not so much.

Reframe failure in more nuanced terms. In complexity, failure is the price of admission to discover the path to success.

Create a culture of experimentation and learning by embedding the mindset and practices in everything you say and do, from hiring to onboarding to performance management. Repeat it often, and reward it when it happens.

Instead of embarking on futurology exercises, design small-scale, safe-to-fail experiments to learn your way forward. Keep stakeholders in a close feedback loop about the results, rather than promising something you can’t necessarily deliver.

Thanks for reading. If you enjoyed this post, please consider hitting the ❤️ button, and sharing it using the button below.

Until next week, have a good one! 🙏

¹ Liz Keogh, whom I quoted in an earlier post, explains safe-to-fail probes exceedingly well. Here’s an example.

² The XP website also states, “When a technical difficulty threatens to hold up the system's development put a pair of developers on the problem for a week or two and reduce the potential risk.” It breaks my heart how little pair programming there is in the industry, broadly speaking.